The keynote is over. The sizzle reel has cooled. And while Apple’s WWDC 2025 keynote delivered one of the most feature-dense presentations in years, it also left a lingering question in the air—is Apple really serious about GenAI, or are they still playing it safe behind the glass?

Let’s break it down.

A Design Renaissance… with Limits

The introduction of the Liquid Glass design system marks Apple’s first major UI redesign since iOS 7, and it’s a beautiful, ambitious refresh. Fluid layers, translucent surfaces, and subtle motion effects now span iOS 26, iPadOS 26, macOS Tahoe 26, watchOS 26, tvOS 26, and visionOS 26. It’s elegant. It’s futuristic.

But it’s also a bit… superficial.

Design polish is welcome—especially after years of stagnation in macOS and iPadOS. The new multitasking tools on iPad are arguably the most meaningful upgrade in years.

We finally have freely resizable windows, window tiling, an Exposé-style overview, and a Mac-like menu bar. Power users, rejoice.

But for all the visual flourish, the deeper issue remains: Apple’s GenAI story still feels incomplete.

Apple Intelligence: Clever, Not Revolutionary

Apple Intelligence—Apple’s long-awaited answer to the GenAI moment—isn’t vaporware this time. It exists. It works across iPhone, iPad, and Mac. It can summarize content, suggest replies, generate emojis and images, and even run automations in Shortcuts.

But it’s still very much a controlled demo.

Smart? Yes. Private? Definitely.

But compared to what we’ve seen this year from OpenAI, Google, and Microsoft, it feels like Apple is tinkering with assistant features rather than redefining how we interact with devices.

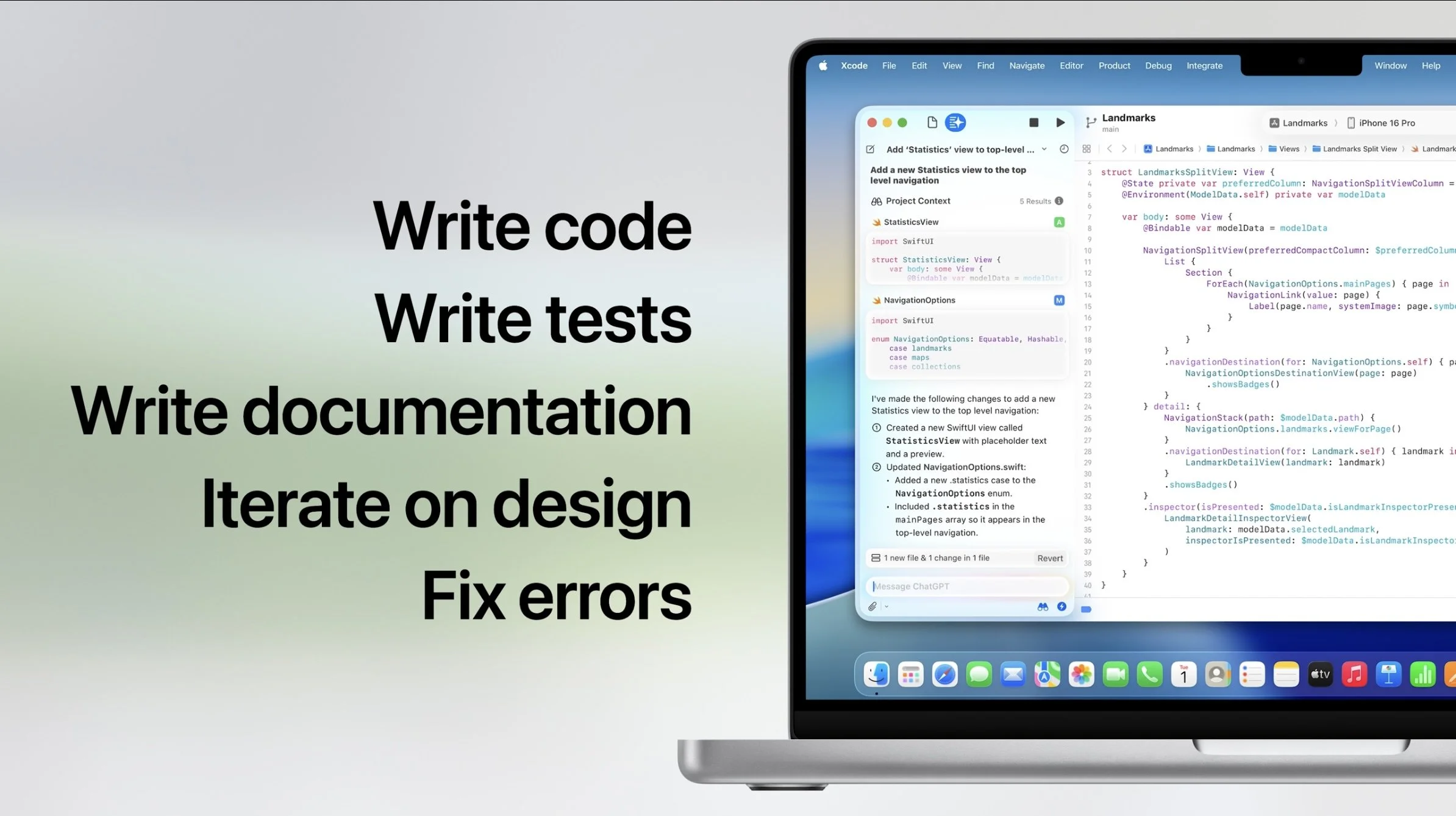

We didn’t see Apple lean into AI-powered creativity tools the way Adobe, Notion, or even Canva have. No AI document co-editing. No AI-native IDE, although Xcode 26 does add ChatGPT integration for coding help. And still no sign of a unified conversational assistant on par with ChatGPT (GPT-4o) or Google’s Gemini Live.

Why? Because Apple isn’t building an AI-first world. They’re building an Apple-first one.

Developer-Centric, But Cautious

Instead of betting the house on generative AI, Apple has opened up the sandbox: the new Foundation Models framework gives developers access to the on-device model at the core of Apple Intelligence, so apps can use it in a way that is private, runs locally, and stays consistent with Apple’s values.

In theory, this is powerful. It gives developers a secure way to integrate AI without sending user data to third-party clouds. But in practice, it also offloads the heavy lifting to the ecosystem. Apple’s approach seems to be: we’ll build the rails, you run the train.

The question is: will developers take the bait? And will Apple’s architecture be nimble enough to support truly ambitious AI applications when the rest of the world is experimenting live and at scale?

The Gruber Snub: Signals We Shouldn’t Ignore

For years, Apple executives have joined John Gruber for a live episode of The Talk Show during WWDC week, an appearance that serves as both a PR victory lap and an informal developer debrief.

Not this year.

Apple declined to appear on Gruber’s show, and that sends a strong message: they’re not ready to face the hard questions. Whether it’s the delay in their AI roadmap, missteps with Vision Pro adoption, or frustrations around App Store policies, Apple seems to be insulating itself at a time when transparency would go a long way.

For a company that prides itself on storytelling, this silence was loud.

So, Where Does Apple Go From Here?

To Apple’s credit, they delivered what they do best: a cohesive vision, refined platforms, and developer tools that feel deeply integrated. The keynote showed a company still obsessed with control, design, and end-to-end polish.

But the world has changed.

We’re in an era where GenAI isn’t just a feature—it’s the new operating system for productivity, creativity, and communication. Apple’s reluctance to embrace that reality head-on feels increasingly out of sync with the broader tech narrative.

The developer tools are in place. The Foundation Models framework exists. But unless Apple itself uses these tools in more transformative ways, or lets developers do so without friction, its ecosystem risks falling behind.

Final Thoughts

Apple is still Apple. They’ll always prefer depth over speed, polish over hype. But WWDC 2025 made one thing clear: the company is now on the defensive in the GenAI conversation.

The real test isn’t what they announced today—it’s what gets built in the next 12 months because of today.

And if Apple won’t lead the AI wave themselves, they’d better hope their developers do it for them.